This opto-output rack I bought from China cost less than $17. They used to cost an order of magnitude more than that. It is kind of sad we do not make them much anymore in the US, but it is a very good thing they are available at such a low price and that we do not have to make them as low-cost items with thin profit margins. This is the last piece of hardware I needed for hooking up the controls for our coffee bean sorting project, but two other projects have taken priority (involving clinical trials and compliance issues, which is a good thing because the trials and compliance issues would not be needed if the product did not work). So I am going to have to sit and just look at this fun new toy for a month or two before I can hook it up and make it do its thing.

Category: Technology

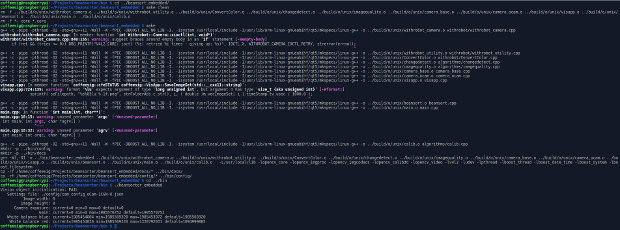

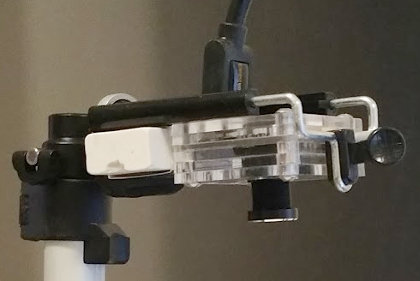

Gene sent me the first camera stand and bracket today. I am actually at the point now where I have enough mechanical items to start doing some more bean testing, but as I feared, I have now become the bottleneck. I have decided Flask and Python are probably not the tools I want to use for the machine interface server, so I am switching over to Node.js. I am trying out the Visual Studio Code IDE to develop the app. So far (which is not very far), I am very impressed with it all and think it will make the whole enterprise easier to develop, deploy, maintain, and extend. I might change my mind after I get a little deeper, but so far so good.

Thanks, Gene!

The indicator light tower for the bean sorting project arrived today. Really nice, but really cheap, too. I hope it works when I hook it up. Honestly, I had gotten pretty burnt out on all the user interface programming I was doing, and with a ton of stuff going on at my day job and a trip to Canada on top of that, I was getting a little weary and was planning to take a break. Then some new hardware came in the mail, and I realized I would not be able to hook it up and get it going until I finished some UI enhancements, bug fixes, and robustification.

Nothing motivates an engineer like new toys, and this definitely qualifies. So today I am reinvigorated and will dive back into the UI so I can move on to the fun stuff. In the meantime, we are putting together a marketing survey of a large group of potential users of this product. Actually, there is value in making this thing (for me, anyway) whether or not there turns out to be a market for it. I am learning a ton, having fun, and putting together the structure I need to complete other projects of a similar nature with my buddy John.

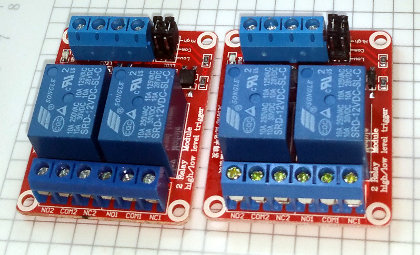

It was a long and busy day today. I expected it to slow down, but work poured in right at quitting time and I am just finishing up what I could. The good news is that the relays to control the LEDs for the bean sorter project arrived today. They are small, cheap, and should be perfect for this project, just not as the strobe control I/Os we need. They are mechanical relays that take up to 10 ms to switch on and 5 ms to switch off. That is way too slow for what we want to do. The good news about the bad news, though, is that I thought I was only getting one board, but I got two. I will be able to use them for a lot of the development work while I wait for some faster solid-state switches, AND I will be able to use them in the product to control the indicator lights that show machine status.
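
A little arithmetic shows why those switching times rule the relays out for strobing. This is a rough budget only: the 10 ms and 5 ms figures are the relay numbers above, while the 30 fps frame rate and the 50 µs flash length are my assumptions for illustration, not measured requirements.

```python
"""Rough timing budget: mechanical relay vs. a strobe flash.

The 10 ms / 5 ms switching times are the relay figures quoted above.
ASSUMPTIONS: a 30 fps camera and a hypothetical 50 microsecond flash.
"""

RELAY_ON_S = 10e-3    # worst-case time for the contacts to close
RELAY_OFF_S = 5e-3    # worst-case time for the contacts to open
FRAME_RATE_HZ = 30.0  # assumed camera frame rate
FLASH_S = 50e-6       # hypothetical strobe pulse length


def relay_max_cycle_hz(on_s: float, off_s: float) -> float:
    """Fastest full on/off cycle the relay could complete (contact bounce ignored)."""
    return 1.0 / (on_s + off_s)


def delay_to_flash_ratio(on_s: float, flash_s: float) -> float:
    """How many flash lengths fit inside the relay's worst-case switch-on delay."""
    return on_s / flash_s


if __name__ == "__main__":
    print(f"max relay cycle rate: {relay_max_cycle_hz(RELAY_ON_S, RELAY_OFF_S):.1f} Hz")
    print(f"frame period at {FRAME_RATE_HZ:.0f} fps: {1000.0 / FRAME_RATE_HZ:.1f} ms")
    print(f"switch-on delay = {delay_to_flash_ratio(RELAY_ON_S, FLASH_S):.0f} flash lengths")
```

The relay could cycle roughly 66 times a second, which sounds like plenty for 20-30 fps, but its switch-on delay alone is a couple hundred times longer than the assumed flash and is only specified as a maximum, so there is no way to time a pulse that short with it.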

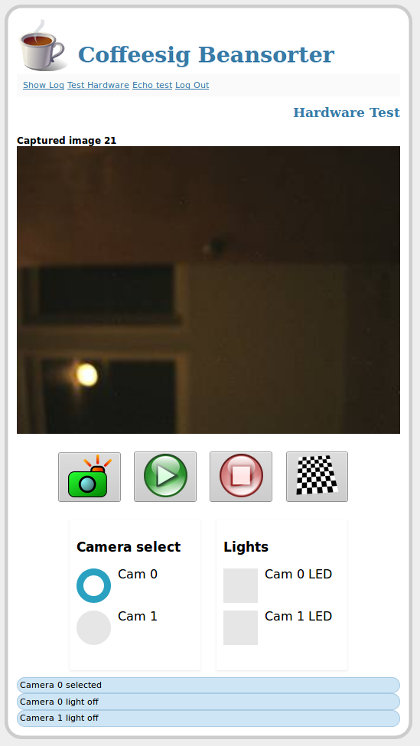

The browser-based GUI for the bean sorting project is now up and running and being served from the Raspberry Pi. I only have one camera running right now because I only have one camera, but it does everything that needs to be done. There is a lot under the hood on this thing, so it should serve as a good base for other embedded machine vision projects besides this one.

In terms of particulars, I am using a Flask (Python 3)/uWSGI/nginx-based program that runs as a service on the Raspberry Pi. Users access this service wirelessly, from anywhere on the internet. The service passes these requests to the C++/OpenCV-based vision application, which also runs as a service on the Raspberry Pi. Currently, we can snap images, show “live” video, read the C++ vision log, and do other such tasks. We will probably use something other than a Raspberry Pi for the final product, with a USB 3.0 port and the specific embedded resources we need, but the Raspberry Pi has been great for development and will do a great job for prototypes and demonstration work.
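
I have not said how the Flask service hands requests to the C++ vision program, so here is a minimal sketch of one common approach, not necessarily the one used here: a one-line text command over a local TCP socket. The port number, the `SNAP` command, and `StubVisionHandler` are all hypothetical; the stub stands in for the C++/OpenCV service so the example runs on its own, and a Flask route would simply call `send_command()` and return the reply.

```python
"""Sketch: a web front end relaying a request to a separate vision service.

ASSUMPTIONS (not the actual design): the vision service listens on a local TCP
port and speaks a one-line text protocol ("COMMAND\n" -> "REPLY\n").
StubVisionHandler is a hypothetical stand-in for the C++/OpenCV program.
"""
import socket
import socketserver
import threading


def send_command(cmd: str, host: str = "127.0.0.1", port: int = 5555,
                 timeout: float = 2.0) -> str:
    """Send one newline-terminated command and return the one-line reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(cmd.encode("ascii") + b"\n")
        with sock.makefile("r", encoding="ascii") as reply:
            return reply.readline().rstrip("\n")


class StubVisionHandler(socketserver.StreamRequestHandler):
    """Hypothetical stand-in for the vision service: acknowledges each command."""

    def handle(self) -> None:
        line = self.rfile.readline().decode("ascii").strip()
        self.wfile.write(f"OK {line}\n".encode("ascii"))


def start_stub_server() -> socketserver.TCPServer:
    """Start the stub on an OS-chosen free port in a background thread."""
    server = socketserver.TCPServer(("127.0.0.1", 0), StubVisionHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__":
    srv = start_stub_server()
    print(send_command("SNAP", port=srv.server_address[1]))  # prints "OK SNAP"
    srv.shutdown()
    srv.server_close()
```

Keeping the vision code in its own process means a hung web request cannot stall image processing, and either side can be restarted independently; a Unix domain socket would work just as well as TCP for this.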

The reason I put “live” video in scare quotes is that I made the design decision not to stream the video with GStreamer. In the end applications I will be processing 1-megapixel images at 20-30 frames per second, which is beyond the bandwidth available for streaming at any reasonable rate. The purpose of the live video is camera setup and a bit of a reality check at runtime, showing results for every 30th to 100th image along with sort counts. There is no way we could stream the images at processing rates, and we want to see something better than a degraded streamed image.
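
Some back-of-the-envelope numbers make the bandwidth argument concrete. This sketch assumes 8-bit monochrome pixels and no compression (assumptions, not specs of our camera), and `every_nth()` is a hypothetical helper showing the kind of decimation described above, where only one frame in N goes to the browser.

```python
"""Why full-rate streaming is out of reach, and one way to decimate frames.

ASSUMPTIONS: 8-bit monochrome pixels (1 byte/pixel) and no compression;
every_nth() is a hypothetical illustration, not the actual application code.
"""
from itertools import islice
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")


def raw_bandwidth_mbit_s(pixels: int, fps: float, bytes_per_pixel: int = 1) -> float:
    """Uncompressed data rate in megabits per second."""
    return pixels * bytes_per_pixel * 8 * fps / 1e6


def every_nth(frames: Iterable[T], n: int) -> Iterator[T]:
    """Yield frames 0, n, 2n, ... for the preview page; the rest are only processed."""
    return islice(frames, 0, None, n)


if __name__ == "__main__":
    for fps in (20, 30):
        print(f"{fps} fps: {raw_bandwidth_mbit_s(1_000_000, fps):.0f} Mbit/s raw")
    preview = list(every_nth(range(300), 30))  # 300 frames = 10 s at 30 fps
    print(preview)  # [0, 30, 60, ..., 270]: one preview frame per second
```

Even before compression, 1 megapixel at 20-30 fps works out to 160-240 Mbit/s, far more than we can count on over a connection from anywhere on the internet. Sending every 30th to 100th frame cuts that by a factor of 30 to 100 while every frame still gets processed.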

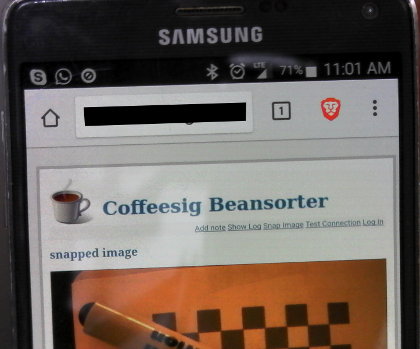

After about a gazillion fits and restarts associated with reading the manual and getting syntax and basic concepts wrong, I have finally gotten the bean sorter web interface up and running. The idea is to be able to control the bean sorter vision system from a cell phone, tablet, or PC. I have been going around in circles for about two weeks now, but it appears I am ready to move on to the next thing. Here is a shot of my phone accessing the site from outside the LAN. I am glad to be done with this. The next step is to go beyond loading a previously captured image and let users capture images by clicking a link.

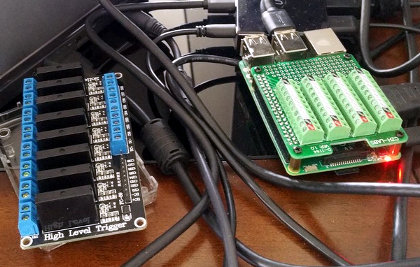

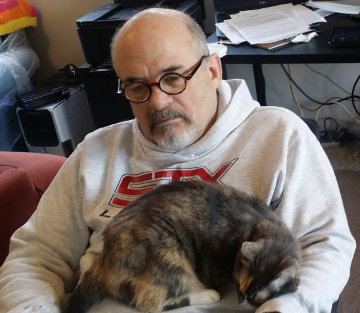

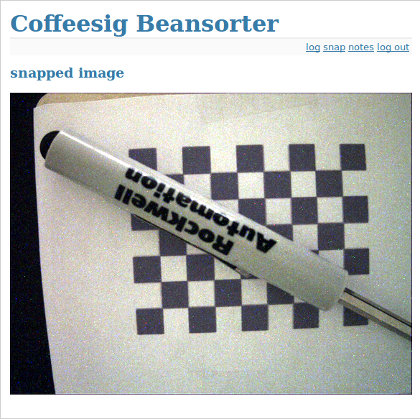

Kiwi and I continue to program hard on our Raspberry Pi and coffee bean project. You can see from the image at the right that we made a small breakthrough: the capture control is now on the Raspberry Pi, along with the ability to display the last image via the web. I suppose it is not such a big deal, but since I have generally worked on image processing algorithm development and the analytics that go along with it, I am not such a great web programmer. It has been frustrating but fun to work through the syntax and understand the idiosyncrasies of embedded web servers and programming tools. Nevertheless, I have the worst of the initial struggle behind me and look forward to returning focus to the application at hand. The camera is set up with the calibration target underneath. The next step will be to create a way for the web server to talk to the camera control program so a user can capture an image on demand, calibrate the system, run a bunch of beans, look at logs to make sure everything is working right, etc. I have done more of that kind of thing than web programming, but I am sure there is a lot of minutiae I have forgotten.

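
Once requests reach the camera control program, they have to be routed to the right action (capture, calibrate, run beans, read logs). A table of handlers keyed by command name is one simple way to do that. In this sketch the command names and handler bodies are hypothetical placeholders, and it assumes one text command per line.

```python
"""Sketch: dispatching web-server commands inside the camera control program.

ASSUMPTION: commands arrive as short text lines such as "CAPTURE" or
"LOG 20". The command names and handler bodies are hypothetical
placeholders for the real capture, calibration, and log code.
"""
from typing import Callable, Dict, List


def capture(args: List[str]) -> str:
    return "OK captured"  # placeholder: would trigger the camera and save an image


def calibrate(args: List[str]) -> str:
    return "OK calibrated"  # placeholder: would run the pixel-to-mm calibration


def tail_log(args: List[str]) -> str:
    n = int(args[0]) if args else 10  # raises ValueError on e.g. "LOG abc"
    return f"OK last {n} log lines"  # placeholder: would read the vision log


HANDLERS: Dict[str, Callable[[List[str]], str]] = {
    "CAPTURE": capture,
    "CALIBRATE": calibrate,
    "LOG": tail_log,
}


def dispatch(line: str) -> str:
    """Parse one command line and route it to its handler; never raise."""
    parts = line.strip().split()
    if not parts:
        return "ERR empty command"
    handler = HANDLERS.get(parts[0].upper())
    if handler is None:
        return f"ERR unknown command {parts[0]}"
    try:
        return handler(parts[1:])
    except ValueError as exc:
        return f"ERR {exc}"


if __name__ == "__main__":
    for cmd in ("capture", "LOG 20", "BOGUS", ""):
        print(repr(cmd), "->", dispatch(cmd))
```

An unknown or malformed command comes back as an `ERR` reply instead of an exception, so a typo on the web side cannot crash the vision program.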

After a struggle that took way longer than it should have, I was able to get the Raspberry Pi ready for development. It is now exposed to the outside world, so our partners in Texas and our mechanical guru in Montana can access the Raspberry Pi from their cell phones, tablets, or other connected devices. The web page is just a placeholder for other stuff we will do, but everyone needs a login page.

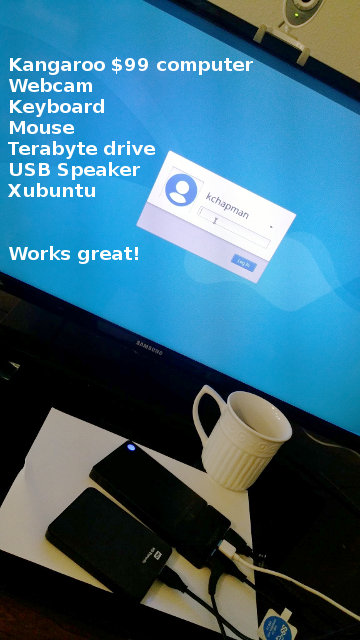

The old laptop Lorena used as her main computer died yesterday, so in the middle of my day job and the bean project, I got to build her a new computer. I have to admit I had quite a bit of fun with it. It is based around a $99 Kangaroo computer with an Atom processor, Bluetooth, Wi-Fi, etc. The computer is the smartphone-shaped thing between the coffee cup and the terabyte hard drive. We have the following things connected:

- Monitor

- Mouse

- Keyboard

- 1 TB drive

- Webcam

I ordered a cheap USB speaker and will get a microphone so we can use the system for Skype. It connected to the wireless network with no problem. We installed Xubuntu on it, which is a lightweight version of Ubuntu. It is actually pretty snappy and just what Lorena needs to browse the web. I kind of wish I had one myself. I will just have to be satisfied with my Raspberry Pi.

Got the bean sorter program running on the Raspberry Pi. It was WAY less trouble than I expected. I am not sure whether it is because I have more skills than before or they have just made it all easier. Probably a little of both. Next task is to disconnect the camera from my development computer and try to get it going on the RPi.

Update: Recognizing the camera, but taking black images so far. Giving up for the night.

The other thing that arrived today is my new diet headset, a Logitech H800 Bluetooth/wireless model. It connects to my computer through Logitech's little custom wireless dongle and to my telephone via Bluetooth. The reason it is a diet headset is that it keeps me from being tethered to my desk while I am talking on the phone or via Skype. That is a FINE thing because I get in extra steps, which lets me either eat more or lose more weight or both.

Things are happening fast and furious with the bean sorter project. The pre-prototype lights Gene made for me arrived, and they are just perfect. He had them all hooked up, so all I have to do is flip a switch. In addition, two light controllers arrived in a separate package. Both of them are pulse-width-modulated (PWM) dimmers, but one is controlled manually with a dial and the other is controlled digitally via a computer, in this case a Raspberry Pi. I can hardly wait for the weekend to see if I can get the control part of this thing going.

Our bean sort project has heated back up, literally and figuratively. Gene finished the prototype light stands and shipped them out. Here is a picture of them working. They heat up a ton when they run on constant current, so I have ordered a manual PWM light driver (with a dial for brightness) and a PWM driver that I should be able to control from the Raspberry Pi. All of that should arrive within the next few days, which means I am the one holding everyone up because there are so many things I have to do before I can test them. I can hardly wait to get them in my hands.
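
Since the lights get hot under constant current, the appeal of PWM is that the LEDs are only on for a fraction of each period, so average power, and roughly the heat, drops in proportion to the duty cycle. This is an idealized sketch; the 10 W light and 1 kHz PWM frequency are made-up numbers, not specs of Gene's lights or the drivers I ordered.

```python
"""Sketch: how PWM dimming cuts average LED power (and heat).

ASSUMPTIONS: an idealized driver that switches fully on/off, a hypothetical
10 W light, and a hypothetical 1 kHz PWM frequency.
"""


def _check_duty(duty: float) -> None:
    if not 0.0 <= duty <= 1.0:
        raise ValueError("duty must be between 0 and 1")


def on_time_us(duty: float, freq_hz: float) -> float:
    """Length of the 'on' part of each PWM period, in microseconds."""
    _check_duty(duty)
    return duty * 1e6 / freq_hz


def average_power_w(duty: float, full_power_w: float) -> float:
    """Average electrical power: full power scaled by the fraction of time on."""
    _check_duty(duty)
    return duty * full_power_w


if __name__ == "__main__":
    for duty in (1.0, 0.5, 0.25):
        print(f"duty {duty:.0%}: on {on_time_us(duty, 1000):.0f} us per period, "
              f"average {average_power_w(duty, 10.0):.1f} W")
```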

In the meantime, I am working on the pixel-to-world coordinate calibration model for the beans. I hope to have that done sometime today, or by Thursday at the latest. Then I can work on controlling the lights and capturing images with the Raspberry Pi.
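
The simplest version of a pixel-to-world calibration is a single scale factor measured from a target with features a known distance apart. This sketch assumes the camera looks straight down at a flat target with negligible lens distortion and square pixels, and the point coordinates are made up. A fuller model would account for perspective and lens distortion, for example with a homography or OpenCV's camera calibration routines.

```python
"""Sketch: a scale-and-offset pixel-to-world model from a calibration target.

ASSUMPTIONS: the camera looks straight down at a flat target, lens distortion
is negligible, and pixels are square, so one mm-per-pixel factor applies in
both directions. All coordinates below are made up for illustration.
"""
import math
from typing import Tuple

Point = Tuple[float, float]


def mm_per_pixel(p1: Point, p2: Point, known_distance_mm: float) -> float:
    """Scale from two target features a known real-world distance apart."""
    pixel_distance = math.dist(p1, p2)
    if pixel_distance == 0:
        raise ValueError("calibration points must be distinct")
    return known_distance_mm / pixel_distance


def pixel_to_world(p: Point, origin_px: Point, scale: float) -> Point:
    """Map an image point to millimetres relative to the target origin."""
    return ((p[0] - origin_px[0]) * scale, (p[1] - origin_px[1]) * scale)


if __name__ == "__main__":
    # Two dots 10 mm apart that appear 200 px apart in the image.
    s = mm_per_pixel((100.0, 100.0), (300.0, 100.0), 10.0)
    print(s)  # 0.05
    print(pixel_to_world((140.0, 160.0), (100.0, 100.0), s))  # (2.0, 3.0)
```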

Gene and I continue to make progress on our bean inspection project. Here is the first pass at measuring bean size as beans drop past the camera. This includes finding the bean in the image, calculating its contour, and measuring how big it is in the image. The next step is to convert the bean size measured in pixels to its size in millimeters. I am halfway into that.
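
Going from pixels to millimeters has one trap worth spelling out: lengths scale by the mm-per-pixel factor, but areas scale by its square. This sketch computes contour area with the shoelace formula (the same quantity OpenCV's `cv2.contourArea` reports for a contour) and then converts it; the rectangle standing in for a bean and the 0.05 mm/pixel scale are hypothetical.

```python
"""Sketch: contour area in pixels, then converted to square millimetres.

The shoelace formula gives the area of a simple closed polygon. The
rectangle and the mm-per-pixel scale are hypothetical example values.
"""
from typing import Sequence, Tuple


def polygon_area_px(contour: Sequence[Tuple[float, float]]) -> float:
    """Shoelace formula: area of a simple closed polygon, in square pixels."""
    n = len(contour)
    twice_area = 0.0
    for i in range(n):
        x1, y1 = contour[i]
        x2, y2 = contour[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0  # abs() makes vertex order irrelevant


def area_mm2(area_px: float, mm_per_px: float) -> float:
    """Areas scale with the square of the linear mm-per-pixel factor."""
    return area_px * mm_per_px ** 2


if __name__ == "__main__":
    # A 160 x 100 px rectangle standing in for a bean outline.
    box = [(0, 0), (160, 0), (160, 100), (0, 100)]
    a_px = polygon_area_px(box)
    print(a_px)  # 16000.0
    print(area_mm2(a_px, 0.05))  # about 40 mm^2
```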

The other thing I did this weekend was load a Raspberry Pi with the latest Raspbian OS and get it running on the network. Right now, I am doing all my work on my Linux PC, but the idea is to move it over to the Raspberry Pi as soon as it is a little further along the development path, because the Pi is a much cheaper computer. There are some other options that might be even better (cheaper and faster), but I have a Raspberry Pi, so that is where we will start.

Gene is not sitting still either. He has built me up some prototype lighting, but I will save that for a post of its own.

I bought a global shutter camera from Ameridroid for Gene’s and my new project. It is a pretty amazing little camera, especially for the price. It is USB 3.0, so it runs fast. I do not have the lens I need for our application, so I ordered a three-lens kit (I need one anyway). I hope to start testing beans falling past the camera before the end of the holiday, but that might be a little ambitious.

The other really good thing about this camera compared to the OV5640 cameras I have been using is that WithRobot, the Korean company that makes it, provides great, freely available libraries to control everything the camera does. If I can get the camera control into our prototype program, we will have taken a major step toward the point where we can actually start developing a product.

The camera for the project Gene and I are working on arrived. It is pretty amazing. It cost literally 20% of what a camera with similar features would have cost only five years ago. I am dying to try it out, but in my normal bonehead manner of operation, I did not get the USB 3.0 cable I need to make it work. I was highly confident I had the right cable. It turns out I have a gazillion cables that are rapidly becoming obsolete and a gazillion more that ARE obsolete.

Yesterday I bought a machine vision camera for the project my buddy Gene and I are doing to build a (semi-)cheap little machine to inspect coffee beans. We need what is called a global shutter camera because the beans will be in motion when we capture their images. In the past, a camera like this would have cost in the $1000 range. Over the years, prices dropped to $300-$400. Yesterday, I paid $135 for this camera (quantity 1), and that included shipping. Coupled with a Raspberry Pi and OpenCV (~$200 with a power supply, heat sink, and other necessary stuff), it is possible to build a vision system that is faster (by a lot) and smarter (by a lot) than the $30k (~$74k in today’s dollars) vision systems we used to sell when I started at Intelledex in 1983. The upshot is that it is now possible to do tasks cheaply that no one would ever have thought possible. There are large categories of machine vision problems that companies are accustomed to paying through the nose to solve. That is truly not necessary anymore if one is smart enough to put the pieces together. I hope we are smart enough.
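
A quick calculation shows why the global shutter matters for falling beans. A rolling-shutter sensor reads its rows out one after another, so a moving bean is captured at slightly different times from top to bottom. The drop height, per-row readout time, bean height in rows, and pixel scale below are all illustrative assumptions, not measurements from our setup.

```python
"""Sketch: how far a falling bean moves during a rolling-shutter readout.

ASSUMPTIONS (illustrative only): free fall from rest through a hypothetical
100 mm drop, 20 microseconds to read out each sensor row, a bean spanning
200 rows, and 0.05 mm per pixel. Air drag is ignored.
"""
import math

G_MM_S2 = 9_810.0  # gravitational acceleration in mm/s^2


def fall_speed_mm_s(drop_mm: float) -> float:
    """Free-fall speed after dropping drop_mm from rest (air drag ignored)."""
    return math.sqrt(2.0 * G_MM_S2 * drop_mm)


def rolling_distortion_px(speed_mm_s: float, line_time_s: float,
                          rows_spanned: int, mm_per_px: float) -> float:
    """Distance the bean moves while the sensor reads out the rows it covers.

    A rolling shutter exposes row by row, so a bean falling down the image
    appears stretched or squashed by roughly this many pixels. A global
    shutter exposes every row at once, so this term vanishes.
    """
    elapsed_s = line_time_s * rows_spanned
    return speed_mm_s * elapsed_s / mm_per_px


if __name__ == "__main__":
    v = fall_speed_mm_s(100.0)
    print(f"speed: {v:.0f} mm/s")
    print(f"rolling-shutter distortion: {rolling_distortion_px(v, 20e-6, 200, 0.05):.0f} px")
```

Under these assumptions the bean would be distorted by on the order of a hundred pixels, which is why we want every row exposed at the same instant.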

A very cool new toy arrived at our door a couple of days ago. It is a camera copy stand that will hold a cellphone or any other item that fits its form factor. It will be invaluable in the bean sorting project, and I will be able to use it for a lot of other machine vision image capture testing as well. We have made a lot of progress on the project and expect to start buying the actual cameras and lighting we hope to use within the next few weeks. The next difficult task is to drop the beans past a camera while synchronizing the image capture and the lighting so the bean is not blurry and we can see the surfaces of both sides very well. There are more tricky little things we need to do after that, but we cannot move forward until we know that is possible. As soon as that is figured out, I will see if Gene can help me set up a more permanent test fixture to systematically drop beans past the cameras so we can gather a test set from which to build a classifier.
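
The requirement that the bean not be blurry translates into a limit on how long the light can be on. This sketch assumes free fall from a hypothetical 100 mm drop, 0.05 mm per pixel, and a 1-pixel blur budget; it also assumes the strobe, not the camera exposure, sets how long the bean is lit, which is exactly why capture and lighting have to be synchronized.

```python
"""Sketch: the longest flash that still freezes a falling bean.

With a short strobe, motion blur is set by how far the bean moves while the
light is on. ASSUMPTIONS (illustrative only): free fall from a hypothetical
100 mm drop, 0.05 mm per pixel, and a default blur budget of 1 pixel.
"""
import math

G_MM_S2 = 9_810.0  # gravitational acceleration in mm/s^2


def max_flash_s(drop_mm: float, mm_per_px: float, blur_budget_px: float = 1.0) -> float:
    """Longest flash for which the bean moves no more than blur_budget_px."""
    speed_mm_s = math.sqrt(2.0 * G_MM_S2 * drop_mm)  # free fall, drag ignored
    return blur_budget_px * mm_per_px / speed_mm_s


if __name__ == "__main__":
    t = max_flash_s(100.0, 0.05)
    print(f"max flash: {t * 1e6:.0f} us")
```

With these numbers the flash has to be a few tens of microseconds; a longer drop or finer pixel scale shortens it further.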

The last time Bob and Gena were here, Bob dropped off his spotting scope for us to try for a little while. We knew we had pretty bad optics on all our cameras, but now we are beginning to understand how bad they really are. The scope he brought by is nothing short of amazing and WAY too addictive. We have all been taking turns. Now if I could only figure out how to take a picture through the thing. I can hardly wait until the eagle comes back.

Some good news and some good news arrived yesterday. The first is that my participation in the sickle cell disease diagnostic project is wrapping up. I will still be on call for the machine vision elements of the project, but I will no longer be tasked with the day-to-day programming. The second is that a good friend (Gene C.) I have known since I was a child has agreed to work with me on a side project. We are going to make a “cheap but good” coffee bean inspection machine. There are lots of machines that do that, but none of them are cheap in the way we want ours to be. We hope to do this for another friend who lives in Dallas.

I bought two lights I plan to use for the project: a backlight and a ring light. I am pretty sure we will not be able to use these in our finished instrument, but they will certainly help me with development of lighting and optics. I still need to buy (at least) a few M12-mount lenses and a cheap USB microscope. I already have a camera with the wrong lens, but it has allowed me to start writing the program I will use for image processing and classification algorithm development. I got it to take pictures before I went to bed last night.