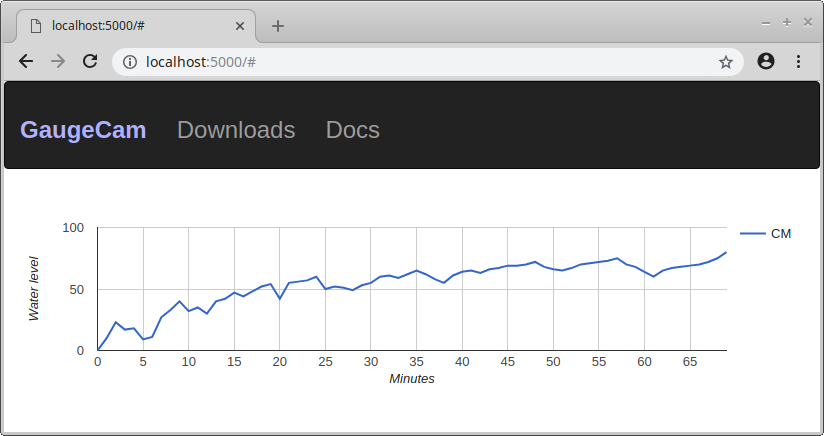

One positive outcome of this new effort to get a PhD in my retirement years is the impetus it gives to spinning the GaugeCam project back up as a wholly open source project (free as in freedom and free as in beer, as they say). I will be doing the heavy lifting on the software end of the project, taking over the server part and maintaining and improving the client parts. I have started on a very basic web server to show the graphs of the water height and point to our blog, downloads, and documentation. I have a bit of a learning curve on this, but am on my way (see above).

Now I just have to figure out a way to include the bean inspection in all this.

Our friend, Bonnie, picked up the computer that arrived at our house when we were off visiting in Boston and Tempe last week. Lorena met with her for lunch yesterday to pick it up. It is a beautiful, brand new Dell 5491 14″ touchscreen i7 laptop with all the requisite amounts of memory and drive space to work on the relatively large images with which I work in my job. I love the computer, but it is a hassle to move over all the work I had been doing on the personal computer I used while I waited for my work computer.


Over the last couple of days, I had a couple of long and interesting talks with my old friend, Troy, with whom I worked on the GaugeCam project when we lived in North Carolina. Troy is an Assistant Professor at the University of Nebraska right now with lots of interesting research going on. We had discussed the idea of me reengaging on some of his research when I started to approach retirement. Well, retirement is rapidly approaching and it looks like the stars might be starting to align. This is still just wishful thinking, but we have talked about a few specific ideas and I even called and talked to my old master's degree professor, Carroll Johnson, long retired from the University of Texas at El Paso. We have hope we can make something happen. If this idea comes to fruition, I hope to be writing about it here on a semi-regular basis.


The new Ubuntu operating system (Bionic Beaver 18.04 LTS) came out yesterday and I installed it today. So far it is great. I had been using Xubuntu up until now, but have decided I am going to try Ubuntu for a while and then Linux Mint (Cinnamon) for a while when it comes out before I decide on where to settle for the next few years. I am really glad Ubuntu went back to GNOME and away from Unity. That was the main reason I switched to Xubuntu in the first place–so I did not have to deal with Unity. My good buddy, Lyle W., has been raving about Mint for quite a few years now, as have many others, so I think I need to give that a try.

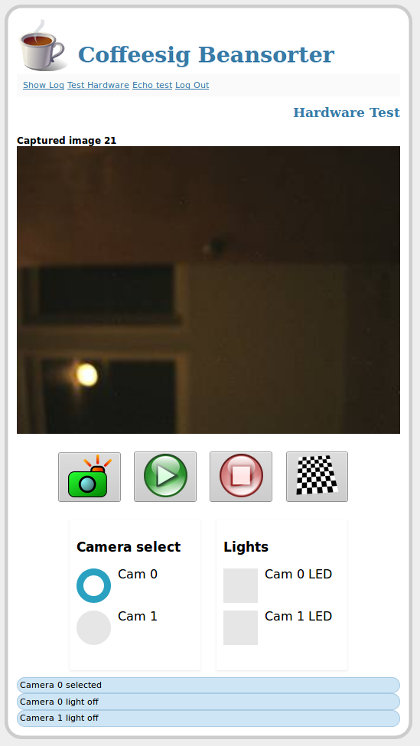

The browser-based GUI for the bean sorting project is now up and running and being served from the Raspberry Pi. I only have one camera running right now because I only have one camera, but it does all the things that need to be done. There is a lot under the hood on this thing, so it should serve as a good base for other embedded machine vision projects besides this one.


You can say a lot of things about this image–it is blurry, it is too dark, it manifests the starry night problem, etc., etc. Still, it is our first image out of the bean sorter cam connected to a Raspberry Pi. I am going to do some infrastructure stuff to be able to pull stuff down easily from the embedded computer, but I will be moving on to work on the lights Gene sent me within a few days. Of course those days extend out quite a bit because I have a day job. Nevertheless, one has to take their satisfaction when they can get it and this is satisfaction any engineer might understand.

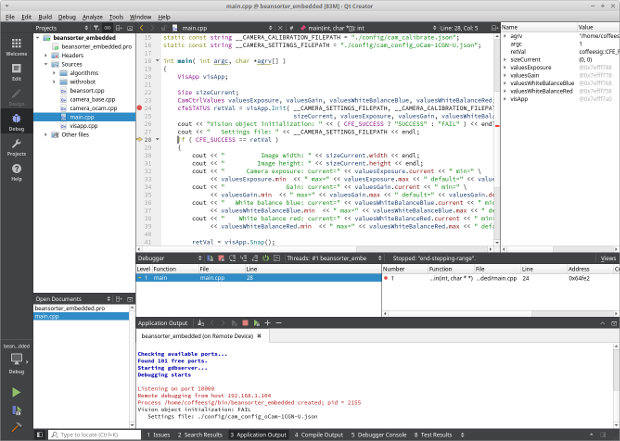

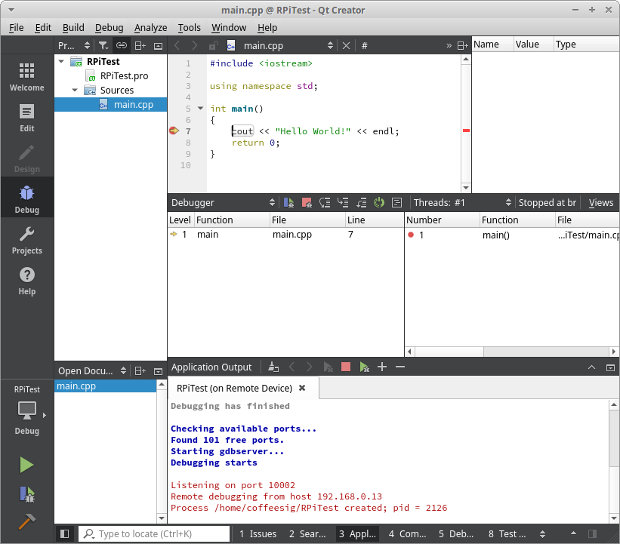

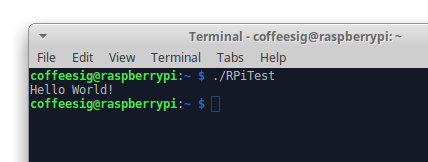

I got up to my office about 7:00 AM this morning and have been programming steadily since then. Well, I call it programming. Really what I was doing was trying to figure out how to get Raspberry Pi programs I write and build on my laptop (that I use as a desktop) to cross-compile with Qt Creator so they will run on the Raspberry Pi, which is what we started with on our coffee bean sorting project because it is cheap and we are cheap. I finally got it all to work about 12 hours later. I am wildly happy to have the bulk of this out of the way. Now I can get back to thinking about coffee beans. Now the program I compiled previously on the Raspberry Pi should be fundamentally easier to debug.

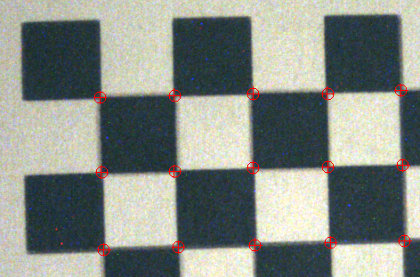

Yesterday, I spent my spare time on creating a camera calibration for our bean sorter project. The purpose of the calibration is to convert measurements of beans in captured images from pixel units to mm units. Images are made up of pixels, so when measurements are performed we know how big things are in terms of pixels. Something might be 20 pixels wide and 17.7 pixels high (subpixel calculations are a topic for another day). Knowing the width of something in an image in pixels is pretty worthless on its own because the real world width (e.g. in millimeters) of that object will vary greatly based on magnification, camera angle and a bunch of other stuff. That is a big problem if the camera moves around a lot.

[...] position through which the beans will fall. In our case that calibration target is a checkerboard pattern with squares of a known size. We take a picture of the checkerboard pattern, find the location of each square in the image in pixels, and store that information away.
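The checkerboard idea can be sketched in a few lines of Python. This is only an illustration, not the code from the project: the function name `mm_per_pixel`, the corner positions, and the 10 mm square size are all made up for the example. It also assumes the camera looks straight at the target with negligible lens distortion, so a single scale factor is good enough; a real calibration would need to handle perspective and distortion as well.

```python
from statistics import mean


def mm_per_pixel(corners_px, square_mm):
    """Estimate the image scale from one row of checkerboard corners.

    corners_px: x positions (in pixels) of consecutive corners along one row.
    square_mm:  known edge length of one checkerboard square in millimeters.
    """
    # Distance between each pair of neighboring corners, in pixels.
    spacings = [b - a for a, b in zip(corners_px, corners_px[1:])]
    # Each spacing covers exactly one square of known size.
    return square_mm / mean(spacings)


# Hypothetical corner positions found in the calibration image.
corners = [100.0, 125.0, 150.0, 175.0, 200.0]
scale = mm_per_pixel(corners, square_mm=10.0)

# Convert a bean measured as 20 pixels wide and 17.7 pixels high.
width_mm = 20 * scale
height_mm = 17.7 * scale
print(f"{scale:.3f} mm/pixel, bean is {width_mm:.2f} mm x {height_mm:.2f} mm")
```

The stored scale stays valid only while the camera, lens, and drop position stay put, which is why a camera that moves around would need to be recalibrated.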

Gene and I continue to make progress on our bean inspection project. Here is the first pass at measuring bean size as beans drop past the camera. This includes finding the bean in the image, calculating its contour and measuring how big it is in the image. The next step is to convert the bean size in the image measured in pixel units to the size in millimeters. I am halfway into that.
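As a rough illustration of the "find the bean and measure it in pixels" step, here is a minimal Python sketch. It is not the project's code and it takes a shortcut: instead of tracing a true contour, it thresholds the image and measures the bounding box of the dark pixels. The function name `measure_bean`, the tiny test frame, and the threshold value are hypothetical, and the sketch assumes a back-lit scene where the bean is darker than the background.

```python
def measure_bean(image, threshold):
    """Return (width_px, height_px) of the bounding box of dark pixels.

    image:     list of rows of grayscale values (0 = black, 255 = white).
    threshold: pixels darker than this value are treated as bean.
    Returns None when no pixel falls below the threshold.
    """
    # Rows that contain at least one bean pixel.
    rows = [r for r, row in enumerate(image) if any(v < threshold for v in row)]
    # Column index of every bean pixel.
    cols = [c for row in image for c, v in enumerate(row) if v < threshold]
    if not rows:
        return None  # no bean in this frame
    return (max(cols) - min(cols) + 1, max(rows) - min(rows) + 1)


# Hypothetical 6x5 frame: bright background with a dark blob in the middle.
frame = [
    [255, 255, 255, 255, 255, 255],
    [255, 255,  40,  35, 255, 255],
    [255,  50,  30,  30,  45, 255],
    [255, 255,  40,  38, 255, 255],
    [255, 255, 255, 255, 255, 255],
]
size = measure_bean(frame, threshold=128)
print(size)
```

A bounding box overstates the size of a bean that falls at an angle, so a contour-based measurement would be the more faithful choice in practice.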

I bought a global shutter camera from

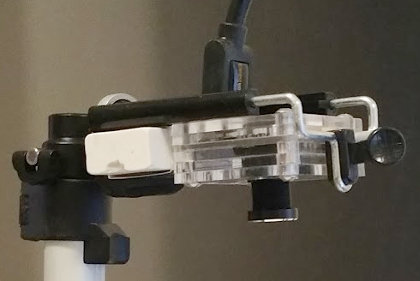

Yesterday I bought a machine vision camera for the project my buddy Gene and I are doing to build a (semi-)cheap little machine to inspect coffee beans. We need something called a global shutter camera because the beans will be in motion when we capture their images. In the past a camera like this would have cost in the $1000 range. Over the years they dropped to $300-$400. Yesterday, I paid $135 for this camera–quantity 1–and that included shipping. If this is coupled with a

Some good news and some good news arrived yesterday. The first is that my participation in the sickle cell disease diagnostic project is wrapping up. I will still be on call for the machine vision elements of the project, but I will not be tasked with the day-to-day programming any longer. The second is that a good friend (Gene C.) I have known since I was a child has agreed to work with me on a side project. We are going to make a “cheap but good” coffee bean inspection machine. There are lots of machines that do that, but none of them are particularly cheap in the way we want our machine to be cheap. We hope to do this for another friend who lives in Dallas.


I bought two lights I plan to use for the project. One of them is a back light and one of them is a ring light. I am pretty sure we will not be able to use these in our finished instrument, but they will certainly help me with development of lighting and optics. I still need to buy (at least) a few M12-mount lenses and a cheap USB microscope. I already have a camera with the wrong lens, but it has allowed me to start writing the program I will use to do image processing and classification algorithm development. I got it to take pictures before I went to bed last night.

I will have one more work week in Texas after today. I enjoy my job and the people where I work a lot, and it was agonizing to turn in my notice. The part of the job I love the most is the requirement to create sophisticated machine vision and video analytics applications with cheap USB cameras and ARM embedded computers that run embedded Linux, usually Debian. We prototype a lot of the stuff on Raspberry Pis, which is great because there is such a big user community that it is easy to quickly get answers about just about anything. There are four cameras in the article accompanying this post that range in value between $20 and $50.
