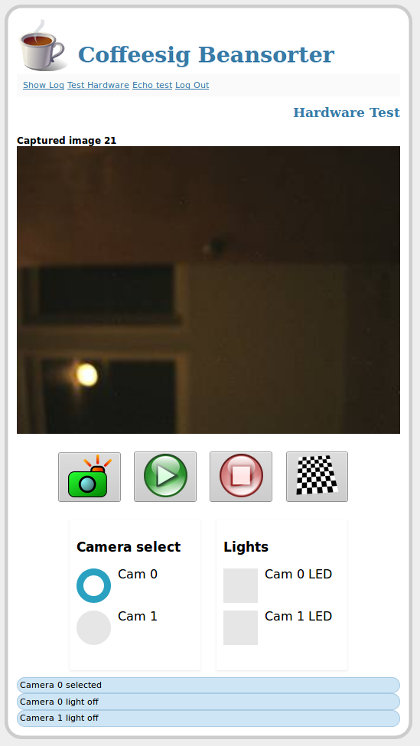

The browser based GUI for the bean sorting project is now up and running and being served from the Raspberry Pi. I only have one camera running right now because I only have one camera, but it does all the things that need to be done. There is a lot underneath the hood on this thing, so it should serve as a good base for other embedded machine vision projects beside this one.

The browser based GUI for the bean sorting project is now up and running and being served from the Raspberry Pi. I only have one camera running right now because I only have one camera, but it does all the things that need to be done. There is a lot underneath the hood on this thing, so it should serve as a good base for other embedded machine vision projects beside this one.

In terms of particulars, I am using a Flask (Python3)/uWSGI/nginx based program that runs as a service in the Raspberry Pi. Users access this service wirelessly (anywhere from the internet). The service passes these access requests to the C++/OpenCV based vision application which is also running as a service on the Raspberry Pi. Currently, we can snap images show “live” video, read the C++ vision log, and do other such tasks. We probably will use something other than a Raspberry Pi for the final product with a USB 3.0 port and the specific embedded resources we need, but the Raspberry Pi as been great for development and will do a great job for prototypes and demonstration work.

The reason I put the “live” of “live” video in scare quotes is that I made the design decision not to stream the video with gstreamer. In the end applications I will be processing 1 mega-pixel images at 20-30 frames per second which is beyond the bandwidth available for streaming at any reasonable rate. The purpose of the live video is for camera setup and to provide a little bit of a reality check at runtime by showing results for each 30th to 100th image as a reality check along with sort counts. There is no way we could stream the images at processing rates and we want to see something better than the degraded streamed image.

Leave a Reply